Are Multi-GPU Setups Like SLI and CrossFire Still Worth It?

SLI and CrossFire are Nvidia’s and AMD’s ways of building a multi-GPU system: you install more than one graphics card, such as a dual-card setup, to boost your gaming performance or power up high-performance computing. Back then, if you wanted the best, you’d slap in two or even three GPUs and link them up. You’d brag about your SLI or CrossFire build to friends and maybe feel a little smug about those extra frames in your games.

These setups were big news for gamers and anyone who needed extra power, like folks working with heavy programs or doing big computer jobs. Running more than one graphics card with Nvidia SLI or AMD CrossFire could mean much smoother gameplay or faster work in certain apps. Some games and programs even gave you almost double the speed—at least, that’s what the box used to say.

But here’s the thing: it’s not like that anymore. These days, it’s rare to see people running a multi-GPU configuration for gaming. Most new games don’t even support SLI or CrossFire, and you run into scaling problems and driver headaches. Even adjusting your graphics settings can’t always fix those issues. It kind of feels like the tech world moved on. Now, graphics cards are so powerful that just one card can handle almost anything you throw at it.

So, is it still smart to chase after those multi-GPU builds? Are SLI and CrossFire even worth thinking about in 2025? Honestly, I’m not sure they make sense for most people now. That’s what I keep wondering—maybe it’s time to take a fresh look at why we even wanted more than one GPU in the first place.

A Brief History of Multi-GPU Technology in Gaming

Back in the late 1990s, if you wanted real power for your games, you’d hear about something called the 3dfx Voodoo2. This was one of the first cards to let gamers link two graphics cards together using a method called Scan-Line Interleaving, a true dual graphics card setup at the time. It sounds kind of wild now, but people were blown away by what a “multi-GPU configuration” could do. Games looked smoother, and everyone wanted to try it, even if it meant fiddling with a bunch of cables.

After that, Nvidia stepped in and bought 3dfx. By the mid-2000s, Nvidia had brought back the SLI name, now standing for Scalable Link Interface, and things really picked up speed. Suddenly, if you had two matching Nvidia cards, you could team them up and get way more gaming performance than before. It felt like something out of the future: just double up, and your frame rates jump. Not long after, AMD came out with its own answer: CrossFire. That kicked off a kind of “GPU arms race,” and gamers everywhere started talking about which multi-GPU setup was better.

From around 2008 to 2015, these setups got super popular. The gaming world was moving fast—everyone wanted higher resolutions like 1440p or even 4K, and a single card couldn’t always keep up. Multi-GPU builds using SLI or CrossFire became almost normal for anyone who cared about max settings. It was cool, but it wasn’t perfect.

People kept running into micro-stuttering issues, where the game would hitch for a split second over and over. Driver problems made things even trickier. Sometimes, you’d get a new game and have to wait weeks for a fix just to make both GPUs work right. Not every game engine was built for multi-GPU either, so some titles never worked well no matter what you tried, much like the GPU bottlenecks that unbalanced hardware causes in gaming.
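To see why micro-stutter felt bad even when the FPS counter looked great, here is a rough sketch in Python. The frame times are made up for illustration, not measured from any real SLI or CrossFire system. Both runs average the same frame rate, but one delivers frames in uneven short/long pairs.

```python
from statistics import mean

def frame_stats(frame_times_ms):
    """Return (average FPS, worst frame time in ms) for a list of frame times."""
    avg_fps = 1000.0 / mean(frame_times_ms)
    return avg_fps, max(frame_times_ms)

# Hypothetical frame times in milliseconds; both lists average 16.67 ms.
even_pacing = [16.67] * 6           # steady delivery
uneven_pacing = [8.0, 25.34] * 3    # frames arrive in short/long pairs

for label, times in (("even", even_pacing), ("uneven", uneven_pacing)):
    fps, worst = frame_stats(times)
    print(f"{label}: {fps:.1f} avg FPS, worst frame {worst:.2f} ms")
```

The average FPS comes out the same for both, but the worst frame in the uneven run takes much longer, and that long frame is what your eyes pick up as stutter.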

The industry started shifting. Game engines got more complicated and didn’t always play nice with more than one GPU. Around this time, DirectX 12 and Vulkan came out and promised multi-adapter support, so you could, in theory, use any GPUs together. But honestly, most companies stopped focusing on it.

It’s just easier for them—and for us—when a single powerful GPU does the job. Now, most of the multi-GPU history is just that: history. Today, you’ll mostly see multi-GPU setups in professional or workstation spaces, like for scientific computing or rendering big video projects.

Gaming’s moved on, but you can still spot echoes of SLI, CrossFire, and even the old 3dfx Voodoo2 if you look close. And who knows, maybe we’ll see something like it again—but for now, it’s mostly a cool story from the past.

Understanding Nvidia SLI and AMD CrossFire Technologies

Nvidia SLI and AMD CrossFire are both ways to set up a multi-GPU configuration, letting you connect two, three, or even four graphics cards (think triple SLI or quad SLI builds) in one computer for better performance. You can’t just use any motherboard or any power supply, though. You need a board that supports these setups and a PSU strong enough to handle all those cards at once.

Graphics card compatibility matters, too—only certain models and brands work together for SLI or CrossFire. Plus, you have to use special software to make sure your computer knows how to run the cards side by side. All the cards have to talk to each other, which can get tricky.

But here’s something you should know: support for Nvidia SLI and AMD CrossFire is fading fast in new GPUs. Most of the latest graphics cards just don’t play nice with these old multi-GPU tricks. Also, even if you set it all up right, you still need games or apps that actually know how to use more than one card. If they don’t, your fancy setup might not help at all.

The Rise and Decline of Multi-GPU Setups

There was a time, right around the mid-2010s, when it felt like everyone wanted two or more graphics cards in their gaming PC. People talked a lot about getting better frame rates and bragged about their powerful setups. Multi-GPU builds using Nvidia SLI or AMD CrossFire were all over YouTube and forums. Enthusiasts compared dual-GPU configurations, trying to squeeze out every frame. I even remember a friend who couldn’t stop tweaking his rig to get every last bit of performance. For a while, multi-GPU setups seemed like the best way to max out your gaming experience.

But things started to change. Here’s what really drove the decline of multi-GPU:

- Performance wasn’t steady—sometimes, games ran better, sometimes they didn’t

- GPU power consumption shot up, which meant lots of heat and lots of noise

- Prices for new GPUs kept climbing, so two cards got way too expensive

- Game and software makers didn’t always bother to support more than one GPU

- Nvidia scaled back SLI over the RTX 20 and 30 series, and AMD wound down CrossFire as it moved to Infinity Cache technology

After a while, even the big GPU brands saw the writing on the wall. Nvidia started to drop SLI support, first for most new cards and then almost entirely for their top lines. AMD did pretty much the same thing, switching focus to Infinity Cache and single, super-strong GPUs. A lot of this shift also came because DirectX 12 and Vulkan allowed for “explicit multi-adapter” setups, but hardly any games or apps really used it. Plus, these days, one powerful GPU can give you better performance per watt than any old multi-GPU build. So, vendors just stopped pushing the idea, and honestly, most people stopped caring about it, too. It’s kind of wild how fast things changed, but I get why nobody really wants to mess with those setups anymore.

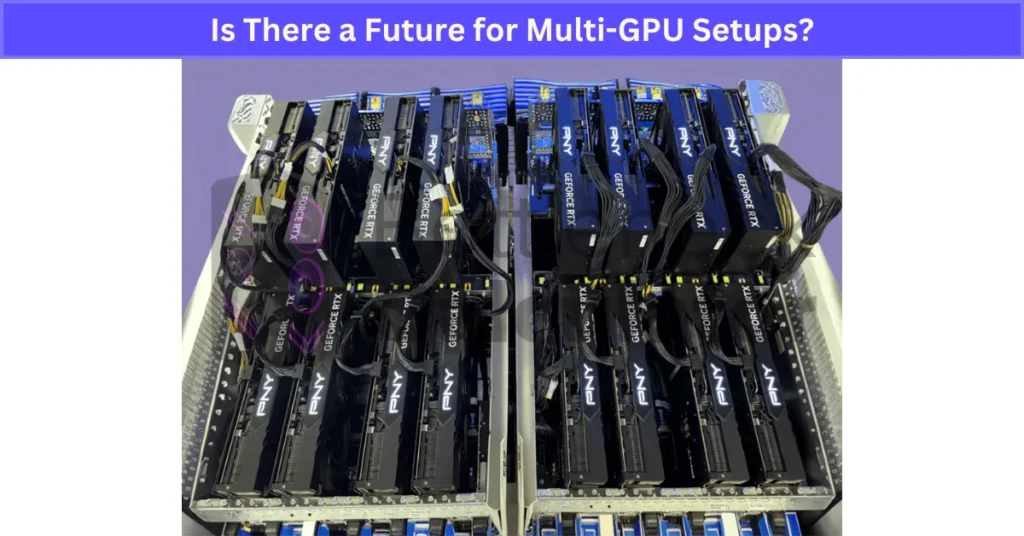

Is There a Future for Multi-GPU Setups?

If you’ve been following graphics cards the last few years, you probably noticed that Nvidia SLI and AMD CrossFire support has faded away for most regular users. The big brands used to brag about running two or even four GPUs in one machine, but these days, official support is rare. You don’t see many new games or hardware releases making a big deal about multi-GPU setups anymore. I kind of miss those times, but it’s clear the industry moved on.

Still, multi-GPU isn’t totally dead. I’ve seen it hanging on in professional spaces, like machine learning labs, big data analysis, and 3D rendering. In those cases, running lots of GPUs together can really speed things up, way more than just using one. Some folks building high-end home setups—super passionate “enthusiasts”—also still tinker with multi-GPU configurations. But let’s be honest, that’s not what most gamers are doing today.

There’s talk about new tech making multi-GPU better in the future. PCIe 6.0 multi-GPU setups promise much faster communication between cards, and things like NVLink and Infinity Fabric could let GPUs work together more smoothly. If we ever get true unified memory pooling, where all the cards share one giant memory bank, maybe a multi-GPU configuration could act almost like a single, giant GPU. That’s a cool idea, at least on paper.

Some niche games and special apps still support multiple cards using DirectX 12 multi-adapter support or Vulkan’s similar tools. I’ve heard a few stories where someone gets a real boost by mixing different cards or using every bit of hardware they have. But those are pretty rare cases, and you usually need to spend hours tweaking settings to get it right.

Here’s the real roadblock: multi-GPU gaming runs into challenges everywhere. High GPU prices make it super expensive to buy two (or more) good cards. Power and heat are major issues, too: your PC can sound like a jet engine, and you might need special cooling just to keep things running. Plus, multiple cards take up a ton of space, so you’d need a huge case and a beefy power supply.

Then there’s the software side. Game developers don’t really have much reason to support multi-GPU anymore, and it adds a ton of complexity to their work. Most games just aren’t built to split graphics jobs between multiple cards, so you end up with problems instead of better gameplay. Even when new APIs like DirectX 12 make it possible, not many devs want the extra hassle. That’s a big part of why Nvidia SLI and AMD CrossFire support is pretty much gone for most gamers.

Honestly, if anything’s going to shake things up, it might be cloud gaming or GPU virtualization. Instead of cramming a bunch of cards into your own PC, you could just rent a bunch of power from a server farm somewhere. That way, all the crazy power and heat issues are someone else’s problem, not yours. Also, companies keep working on power efficiency innovations, which might make running more than one card less of an energy drain someday.

So, is there a future for multi-GPU? If you ask me, probably not, at least for most people. Multi-GPU setups are still cool for pros and folks doing big projects, but for gaming? I’d say single cards are just easier, cheaper, and work better right now. I don’t see that changing soon, unless something really big happens in the tech world.

Where Multi-GPU Setups Still Make Sense Today

Multi-GPU setups aren’t gone—they just moved to special jobs most regular folks don’t do.

- Professional use: Stuff like 3D rendering, big video editing, and scientific computing in labs still needs lots of GPU power. You’ll see multi-GPU setups in AI research labs for deep learning training, too.

- Cryptocurrency mining: This isn’t as popular as it was, but some miners still run big farms with lots of cards running side by side.

- Multi-GPU overclocking: There’s a small group of enthusiasts who chase world records. They use multi-GPU setups just to push hardware to the limit and see how high the numbers can go.

- Cloud computing: Some companies use huge multi-GPU clusters in the cloud, so people can rent serious graphics power for big projects.

Honestly, these setups are rare for gaming now. Unless you’re working in one of these fields or just love tweaking hardware, a single card is usually enough.

Potential Bottlenecks in Multi-GPU Configurations

Running more than one graphics card sounds awesome, but a multi-GPU bottleneck can sneak up on you fast. When you add extra GPUs, whether you’re running a dual-card setup or a more ambitious quad SLI build, you’re also adding a lot more data traffic between the cards. The system has to keep all those GPUs in sync, which isn’t always easy. As soon as the communication gets heavy, the whole setup can slow down.

A big issue is GPU communication latency. All that data has to travel back and forth between the cards, and sometimes it just takes too long. If one GPU is waiting for another to finish its work, everything starts to lag. These system performance bottlenecks can actually make your fancy multi-GPU build run slower than you’d expect.
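A toy model makes the waiting problem easier to picture. This Python sketch assumes the rendering work splits perfectly across the cards and that every frame pays a fixed synchronization cost. Both assumptions, along with the function name and the numbers, are simplifications for illustration and not how any real driver schedules frames.

```python
def multi_gpu_speedup(render_ms, gpu_count, sync_ms):
    """Speedup over one GPU when work splits evenly but each frame pays a sync cost."""
    multi_ms = render_ms / gpu_count + sync_ms
    return render_ms / multi_ms

# Hypothetical 20 ms frame on a single GPU, split across two cards.
for sync_ms in (0.0, 4.0, 10.0):
    speedup = multi_gpu_speedup(20.0, 2, sync_ms)
    print(f"sync {sync_ms:>4.1f} ms -> {speedup:.2f}x")
```

With zero sync cost you get the full 2x. A few milliseconds of waiting per frame already eats a big chunk of that, and once the sync cost equals the time saved, the second card adds nothing at all.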

PCIe lane limits can make things even worse. If your motherboard doesn’t give each GPU enough bandwidth, multi-GPU data transfer turns into a traffic jam. Plus, the software side has to work hard to make sure each card knows what to do and when to do it. If the drivers or the game engine aren’t perfectly tuned, you end up with even more problems. So, a lot of times, the biggest challenge isn’t just having powerful hardware—it’s making sure everything works together without getting in its own way.

How Bandwidth and Memory Affect Multi-GPU Performance

Every graphics card in a multi-GPU setup comes with its own chunk of memory, called VRAM. In traditional SLI and CrossFire setups, VRAM is not shared between cards, so whatever game or app you’re running has to store the same data in each GPU’s VRAM. That means there’s a lot of VRAM duplication across GPUs: two 8 GB cards still give a game only 8 GB to work with, not 16 GB. You don’t actually get to add all the VRAM together, which surprises a lot of people at first.
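The VRAM math can be sketched in a few lines of Python. The function name and the `mirrored` flag are made up for this example: mirrored stands for the traditional case where every card holds its own copy of the data, and the pooled case is the idealized shared-memory setup that most gaming configurations never had.

```python
def usable_vram_gb(vram_per_card_gb, mirrored=True):
    """Usable VRAM: the smallest card when data is mirrored, the sum when pooled."""
    if mirrored:
        return min(vram_per_card_gb)
    return sum(vram_per_card_gb)

cards = [8, 8]  # hypothetical pair of 8 GB cards
print("mirrored:", usable_vram_gb(cards), "GB")
print("pooled (idealized):", usable_vram_gb(cards, mirrored=False), "GB")
```

In the mirrored case the smallest card sets the limit, which is also one reason mismatched cards tend to be a poor pairing.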

Here’s where multi-GPU bandwidth really starts to matter. If your system can’t move data fast enough between the cards, you get bottlenecks. The more you ask from your GPUs—like playing games in 4K or using fancy effects—the more bandwidth and memory you need. If the memory can’t keep up, or the connection between cards is slow, you’ll notice a system performance drop. Sometimes, things just freeze for a second or frames get super choppy.

That’s why good memory handling is so important in multi-GPU setups. Poor management means too much demand on VRAM or slow data transfer, which really drags down performance. Some new tech, like unified memory architectures, tries to fix this by letting all the GPUs share one big pool of memory. But for most setups right now, bandwidth and VRAM limits are a big reason why multi-GPU bottlenecks happen.

Impact of Power and Cooling on Multi-GPU Performance

Adding more graphics cards means a big jump in multi-GPU power consumption. Each GPU adds to the total load your power supply needs to handle, so power supply requirements go up fast. All those cards working together also pump out a ton of heat—GPU heat generation can get out of control if you’re not careful.

That puts a lot of stress on your PC’s cooling in multi-GPU setups. If things get too hot, the GPUs can hit thermal throttling, which slows everything down to keep temperatures safe. I’ve seen people run into stability issues because their PSU just wasn’t strong enough. Modern GPUs have a high thermal design power (TDP), and when you stack them, you really need serious airflow or even liquid cooling to keep bottlenecks from wrecking your performance.
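For a rough feel of how fast the power budget grows, here is a back-of-the-envelope Python sketch. The 250 W allowance for the rest of the system and the 30% headroom are assumptions picked for illustration, not a sizing rule from any PSU or GPU vendor.

```python
def psu_estimate(gpu_tdps_w, rest_of_system_w=250, headroom=0.3):
    """Return (estimated peak load, suggested PSU rating) in watts.

    rest_of_system_w and headroom are illustrative assumptions.
    """
    load = sum(gpu_tdps_w) + rest_of_system_w
    return load, round(load * (1 + headroom))

# Hypothetical 350 W cards: one card versus two.
for tdps in ([350], [350, 350]):
    load, psu = psu_estimate(tdps)
    print(f"{len(tdps)} GPU(s): about {load} W load, look for roughly {psu} W")
```

Nearly all of that extra load ends up as heat inside the case, so the cooling requirement climbs right along with the PSU rating.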

Challenges in Software and Hardware Compatibility for Multi-GPU Systems

Making a multi-GPU setup work isn’t just about plugging in more cards. You need true multi-GPU compatibility between the GPUs themselves, your motherboard, the software, and any deep learning frameworks you want to use. If you mix brands or even different models, things can break or slow down.

With deep learning and scientific work, GPU resource distribution becomes a real headache. The system has to split jobs between all the cards, but if the software doesn’t balance things right, you get a big multi-GPU bottleneck—some cards end up waiting while others work too hard.
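Here is a small Python sketch of that balancing problem. The job costs are invented, and the greedy "largest job to the least-loaded GPU" rule is just one simple heuristic chosen for illustration. It is not what any particular framework or scheduler actually does.

```python
def finish_time(job_costs, assignment, gpu_count):
    """Time until the busiest GPU finishes; the other GPUs sit idle until then."""
    busy = [0.0] * gpu_count
    for cost, gpu in zip(job_costs, assignment):
        busy[gpu] += cost
    return max(busy)

def greedy_assign(job_costs, gpu_count):
    """Hand each job, largest first, to whichever GPU has the least work so far."""
    busy = [0.0] * gpu_count
    assignment = [0] * len(job_costs)
    for idx in sorted(range(len(job_costs)), key=lambda i: -job_costs[i]):
        gpu = busy.index(min(busy))
        assignment[idx] = gpu
        busy[gpu] += job_costs[idx]
    return assignment

jobs = [8, 7, 6, 5, 4, 3, 2, 1]        # hypothetical job costs in seconds
naive = [0, 0, 0, 0, 1, 1, 1, 1]       # first half to GPU 0, second half to GPU 1
balanced = greedy_assign(jobs, 2)

print("naive split finishes at", finish_time(jobs, naive, 2), "s")
print("greedy split finishes at", finish_time(jobs, balanced, 2), "s")
```

Both splits use the same two GPUs and the same jobs. In the naive split one card ends up with far more work than the other, and the lighter card just waits.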

Driver and API support is super important here. You need updated drivers and tools like CUDA or ROCm to manage how everything talks to each other. Even then, it’s easy to run into problems if your hardware and software aren’t perfectly matched. Hardware-software coordination is a big challenge, especially for big projects or research.

Some pros use virtualization or containerization to help make everything work together better, but that’s another layer of complexity. So, when things aren’t set up just right, multi-GPU bottlenecks and crashes can happen fast.

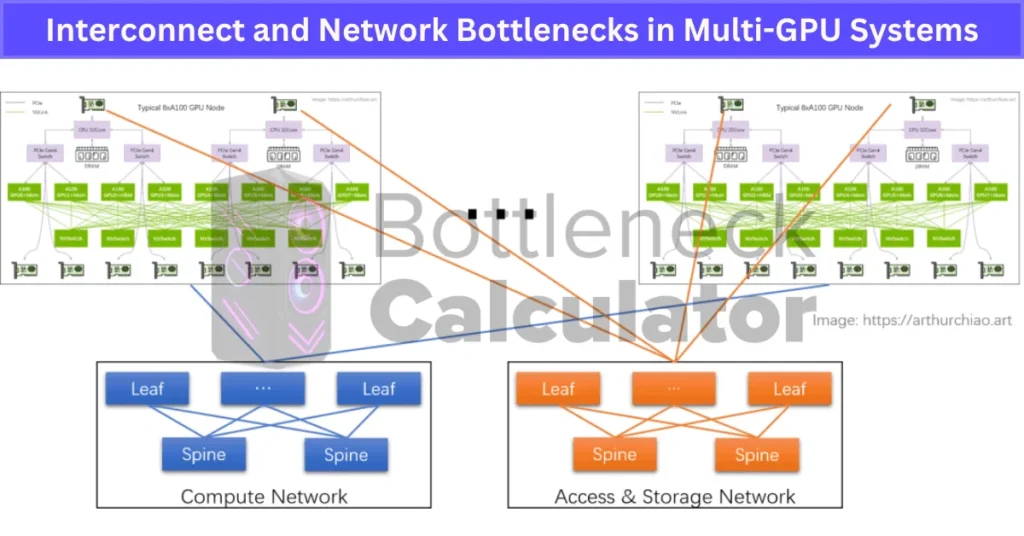

Interconnect and Network Bottlenecks in Multi-GPU Systems

When you build a multi-GPU system, how the GPUs and CPU talk to each other makes a big difference. The most common connection is PCIe, but PCIe bandwidth limitations can turn into a major headache. If you’ve got four GPUs and not enough PCIe lanes, those cards end up fighting for data, slowing everything down. Even with powerful CPUs, if the PCIe slots don’t have enough bandwidth, the data just can’t move fast enough between the GPUs and the CPU. This is where you start to feel the pinch—every card has to wait its turn, so your system can’t work as fast as you’d hope.
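To put rough numbers on the lane problem, this Python sketch splits a fixed pool of CPU lanes evenly across the cards. The per-lane figures are approximate one-direction bandwidths for each PCIe generation. The 16-lane pool and the even split are assumptions for illustration, since real boards wire their slots in different ways.

```python
# Approximate usable bandwidth per PCIe lane, one direction, in GB/s.
PCIE_GB_PER_LANE = {"3.0": 0.985, "4.0": 1.969, "5.0": 3.938}
SLOT_WIDTHS = (16, 8, 4, 2, 1)  # link widths a slot can run at

def per_gpu_link(total_lanes, gpu_count, gen):
    """Split lanes evenly, round down to a valid width, return (lanes, GB/s)."""
    share = total_lanes // gpu_count
    if share < 1:
        raise ValueError("not enough lanes for that many GPUs")
    lanes = next(w for w in SLOT_WIDTHS if w <= share)
    return lanes, lanes * PCIE_GB_PER_LANE[gen]

# Hypothetical platform with 16 lanes available for graphics, PCIe 4.0.
for gpus in (1, 2, 4):
    lanes, gb_s = per_gpu_link(16, gpus, "4.0")
    print(f"{gpus} GPU(s): x{lanes} each, about {gb_s:.1f} GB/s per card")
```

Each time the card count doubles on the same lane pool, the link to every card is cut in half, which is the traffic jam described above.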

There’s also something called NUMA effects, which pop up in systems with lots of processors or when you’re running multi-GPU setups across different sockets or platforms. NUMA stands for Non-Uniform Memory Access. It basically means not all GPUs can reach memory at the same speed. Some cards get data faster than others, causing asymmetric memory access delays. When one GPU gets stuck waiting for info, while another races ahead, you get uneven performance. It’s like having a team where one person has to run upstairs for every tool while everyone else has one right at their feet.

Put these issues together and you get real GPU data transfer latency and a classic multi-GPU network bottleneck. The more GPUs and CPUs you throw into a system, the harder it gets to keep data moving smoothly. Transfers slow down, response times go up, and the whole system can lose efficiency. Some new tech helps—PCIe 5.0 and PCIe 6.0 have way more bandwidth, so that helps move data faster.

Nvidia NVLink and AMD Infinity Fabric are even better, giving cards more direct, speedy ways to share info. But for most setups, PCIe lane limits and memory access issues are still big reasons why multi-GPU interconnect bottlenecks happen and why system performance doesn’t always match the raw power you might expect.

Effective Strategies to Overcome Multi-GPU Bottlenecks

Getting around multi-GPU bottlenecks takes both smart hardware and clever software tricks. You can’t just toss in more GPUs and hope for the best—it takes a plan.

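- Use NVLink or InfiniBand interconnects: NVLink links GPUs inside one machine, and InfiniBand links machines in a cluster. Both are faster than plain old PCIe or ordinary networking, so GPUs can talk to each other with less lag and lower communication overhead.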

- Try GPU memory compression features and smart caching: This helps you get around bandwidth and VRAM limits, making sure each GPU can handle more without slowing down.

- Pick power-efficient GPUs with dynamic voltage and frequency scaling: This lets your system save power when possible and stay cool, so you don’t run into as many heat or stability issues.

- Work on multi-GPU software optimization: You need good drivers and apps built to spread jobs across cards smoothly—without this, even the best hardware can bottleneck.

- AI-driven resource management: New tools are using AI to shift workloads on the fly, keeping things running smoother in tough multi-GPU setups.

Industry best practices and new research are making multi-GPU bottleneck solutions better all the time. If you keep up with both hardware upgrades and software improvements, you can get more power out of your system and avoid the usual slowdowns.

Current Alternatives to Traditional Multi-GPU Setups

The idea of using two or more graphics cards together has lost steam. Instead, the focus has shifted to making single, really powerful GPUs that can handle everything on their own. These days, cards like the Nvidia RTX 3090 or the AMD RX 6900 XT pack enough punch to match or beat what older multi-GPU setups used to offer.

Here are some modern alternatives making big waves:

- High-end single GPU: These cards deliver top-tier gaming performance without the hassle of linking multiple GPUs. They handle 4K gaming and VR with ease.

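- DLSS (Nvidia) and FidelityFX Super Resolution (AMD): Both upscale a lower-resolution image to boost frame rates without needing extra hardware. DLSS uses AI-driven upscaling, while FSR up through version 2.0 relies on hand-tuned spatial and temporal algorithms. This means smoother gameplay even on demanding titles.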

- Real-time ray tracing: This feature adds realistic lighting, shadows, and reflections to games. Supported by recent GPUs, it makes visuals way more immersive without extra GPUs.

Thanks to these advances, you don’t need to juggle multi-GPU complexity anymore. Plus, ongoing improvements like DLSS 3 and FSR 2.0 promise even better performance down the line. For gamers, especially those into VR or 4K, these tech upgrades mean better graphics and smoother play without multi-GPU headaches.

Why Multi-GPU Setups Are Unlikely to Make a Gaming Comeback—But Not Impossible

I’d say a real multi-GPU gaming comeback is pretty unlikely, at least for the next few years. Single GPU performance keeps getting better and better, and power efficiency is improving too. Because of that, most gamers don’t need to mess with multiple graphics cards anymore. It’s easier, cheaper, and less complicated to just buy one strong GPU. Plus, running two or more cards comes with big challenges—extra cost, more heat, and a whole lot of setup headaches. Not every game supports multi-GPU either, so even if you invest in it, you might not see the boost you expect.

That said, some tech advances are slowly changing the landscape. API improvements for multi-GPU setups, faster interconnects between GPUs, and ideas like unified memory help reduce some old problems. These make it easier for software and hardware to work together, which could keep multi-GPU relevant in some cases. Still, motherboard multi-GPU support remains a hurdle. Not all boards handle multiple GPUs well, power supply demands skyrocket with each card added, and Nvidia and AMD GPUs differ in what their architectures support.

Multi-GPU is still alive in productivity fields—scientific computing, AI research, and video rendering often use many GPUs for serious work. Some people also experiment with combining older GPUs creatively to save money as new GPU prices rise. Cloud gaming and streaming might reduce the need for local multi-GPU setups even more, as you rent power from remote servers instead.

So, while I don’t see multi-GPU setups making a big splash in gaming soon, I wouldn’t say it’s impossible. Niche uses and tech improvements might keep it around, just not in the mainstream where single, powerful GPUs rule.

Real User Fixes & Community-Backed Solutions

Quora

We found that using SLI or CrossFire can improve performance if both GPUs are properly supported and balanced, but it’s often hit or miss. Many games don’t support multi-GPU setups well, and even when they do, performance gains are usually around 30–50%, not double. If one GPU bottlenecks the other, you’ll see less benefit. Additionally, multi-GPU setups tend to have more driver issues, higher power use, and compatibility problems with newer titles. Most experts now recommend investing in a single, more powerful GPU instead of relying on SLI or CrossFire, as support and real-world benefits for multi-GPU have declined significantly.

We found a detailed discussion on why the industry abandoned SLI and CrossFire multi-GPU setups. The main reasons include poor game support, complicated driver requirements, and the fact that GPU memory (VRAM) cannot be shared between cards—only processing power is combined. This means each card duplicates textures in its own memory, limiting efficiency. Even when supported, performance gains were often limited to 30–50% rather than doubling. Additionally, companies like Nvidia have little incentive to support multi-GPU setups that could undercut sales of their top single GPUs. Users also recalled issues like microstuttering and frame pacing problems that made multi-GPU gaming feel worse despite higher frame rates. Overall, software and hardware challenges plus business reasons led to the decline of SLI and CrossFire.

TechPowerUp

We found a detailed forum discussion on TechPowerUp about why the dual-GPU era and multi-GPU solutions like SLI and CrossFire effectively died out since around 2013–2014. The key reasons include:

- Business incentives: GPU makers like Nvidia and AMD make more profit selling high-end single GPUs rather than encouraging users to buy two cheaper cards that undercut flagship sales.

- Technical challenges: Multi-GPU setups require heavy driver support and game developer optimization, which was rarely well implemented. Issues like microstuttering, poor frame pacing, and duplicated VRAM (each card needing its own memory copy) limited performance gains.

- Limited game support: Very few games properly supported SLI/CrossFire, and newer APIs like DirectX 12 introduced multi-GPU features that rely on developers, but adoption remains minimal.

- Resource allocation: Companies decided to focus engineering efforts on improving single-GPU performance rather than maintaining complex multi-GPU tech for a small user base.

Users nostalgically recalled powerful dual-GPU cards like the HD 7990 and GTX 690 but acknowledged that despite raw specs, multi-GPU scaling was inconsistent. Overall, both business strategy and persistent technical hurdles led to the decline and near disappearance of consumer multi-GPU solutions.

Final Verdict

Looking at where we are in 2025, SLI and CrossFire just don’t make sense for most gamers anymore. The days of doubling up GPUs for big frame rate boosts are long gone. Today’s single graphics cards are powerful enough to handle 4K, VR, and all the latest effects on their own, without all the setup headaches, driver issues, and random game crashes that came with multi-GPU builds. Game support for SLI and CrossFire is basically gone, and even when it works, you only get partial gains, not double the performance. It’s just easier, cheaper, and way less stressful to buy one strong card and skip the hassle.

That said, multi-GPU setups still have a place for pros working on AI, deep learning, and big rendering projects, but for regular gaming or even most enthusiast builds, they’re more history than future. The smart move for anyone building or upgrading a gaming PC right now is simple: invest in the best single GPU you can afford. You’ll get smoother gameplay, fewer problems, and a much better experience overall.

FAQs

Does SLI still work in 2025?

Nvidia announced that, starting January 1, 2021, it would stop adding new SLI driver profiles, with SLI support continuing only for RTX 2000 series and older cards. Because profiles are no longer updated, newer games and applications don’t benefit from SLI unless the developers build multi-GPU support into the game itself.

Are CrossFire and SLI dead?

SLI and CrossFire are relics of the past with no real use for gaming in 2025. Both technologies have been buried in the tech graveyard and are basically discontinued.

Is SLI still usable?

SLI support largely ended with the RTX 3000 series, where only the RTX 3090 kept an NVLink connector. Even before that, most games didn’t support using two GPUs properly, so only one card was active. Today, multi-GPU setups are mainly used for AI tasks and rendering workloads, not gaming.

Do people still use multiple GPUs?

Now, most people are happy with just one powerful GPU. It gives smoother performance, less noise, and fewer compatibility problems. Dual or multi-GPU setups still exist but are mostly for niche uses, as simpler setups have taken over.

Is AMD CrossFire still a thing?

Not under that name. AMD retired the CrossFire brand in September 2017, although it still develops and supports the technology for DirectX 11 applications. AMD’s related technology for mobile computers with external graphics cards is called AMD Hybrid Graphics.

Can I use two RTX 3060s for gaming?

Not in SLI. The RTX 3060 has no SLI or NVLink connector, so games will only use one of the two cards. A second RTX 3060 only helps in software that addresses each GPU on its own, such as some rendering and AI workloads.